The traditional Unix syslogd only allows logging to a specific file, such as /var/log/messages. To avoid filling disks, log files are usually rotated with a utility such as logrotate(8) or newsyslog(8).

However, this rotation method creates new problems, such as edge cases where logs may be lost, poor behavior when the log rotation fails or system crashes, and needless complication for backup systems. These problems can be eliminated by instead logging into time-based log files (such as log.%Y-%m-%d-%H), instead of using the problematic “hindsight rotation” scheme. syslog-ng, for example, offers logging to filename templates, as do other programs. A less ideal scheme—but still far better than the hindsight conveyer belt method—entails logging to a file based on the startup time of the process.

While some feel the size-based log rotation of conveyer belt schemes is a benefit, there is no reason why a time bucket system cannot also keep trace of file size. Also, if size limits are being exceeded, why? Are useless debug logs flooding the disk? Has proper care and attention been paid to how many logs a system or service will generate, as scaled for the expected traffic, for the lifetime the logs must be retained, with appropriate room for growth? Sadly, logging is often left as an afterthought, and logs end up strewn with entries that only waste space.

Problems with Hindsight Rotation Schemes

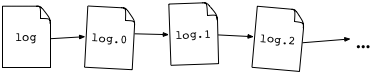

logrotate and newsyslog implementations increment numbers in the names of all the previous log files, and then save the current log to log.0 or sometimes instead log.1, depending on the whim of the rotation implementer. Older log files eventually fall off the end of the conveyer belt after a certain number of rotations. This method creates needless problems and suffers from various design flaws:

Send Signal and Hope

The usual manner of hindsight conveyer belt log file rotation involves a needless extra process—logrotate, for example—issuing a rename(2) call, and some non-zero number of milliseconds—or longer, on a busy system—a signal to the logging process, indicating that the process should carry out the multiple system calls necessary to reopen the log file. Assuming the logging process is able to catch and handle the signal, all will be well. If not, logging will continue to the log.0 rotated file. This is at best a nuisance, perhaps delaying incident resolution, if the on-call cannot immediately find the current logs in the expected log file. At worst, the rotated log will eventually be unlinked when it falls off the rename(2) conveyer belt, while the logging still continues to the file handle opened against the now unlinked inode. This may, given time, fill up the disk, or result in lost logs should the process exit or the system restart. On some systems, /proc tricks can recover data from a unlinked-but-held-open file. A better system would not utilize the external logrotate program, and instead have the logging program handle log rotation itself. This avoids the need for signal handling, thus simplifying the logging code. A parent process could either pipe output to a program such as httplog, or use a software library that supports logfile rotation, such as log4perl.

Some programs have no mechanism to reopen their log files. In these cases, logrotate offers a copy and truncate kluge to solve the problem. This method should not be used, especially for critical logs, as any log messages written by the logging program between the copy and truncate operations will be silently discarded. As above, these programs will be better handled by piping the output to a log handling program that can provide better control over where the logs go.

Pointlessly Complicated Backups

Backup utilities or file synchronization software such as rsync will again (and again, and again…) backup all the log files following each rotation, as the renames will have changed the contents of all the named log files. Worse, rsync in particular will see the same filenames (log, log.0, … log.N), but must scan through every file on both sides of the copy each time, looking for differences between the utterly different files! Reprogramming the backup software to be aware of the rotation policy might be possible, but why waste time worrying about this design flaw? If the log files are instead saved out into time based templates, rsync will easily find and backup only the differences.

The same benefit applies to processing older logs for data: in a sliding conveyer belt scheme, the mining software would need to checksum the contents of each log, then compare the checksum of the random log.N against a table of prior checksums to distinguish seen logs from unseen logs. Time-bucket based logging, on the other hand, offers the filename as a unique identifier of whether a file has been seen, a much simpler and less computationally expensive means to determine whether a file has been processed. While some hindsight log rotation solutions offer the ability to run commands at the time of rotation, this will increase system load at the time of rotation. An unseen file method, on the other hand, can run whenever is most suitable.

Needlessly Hard to Reference Logs

Between daily versus weekly rotation schedules, early morning log file rotation times, and other implementation differences, tracking down an old log file entry is a time consuming task, as one must blast away with grep log.* against potentially gigabytes of log data. If, instead, a time bucket log scheme were used, the location of old logs would always reside in the same file, narrowing the scope of any grep required. Using conveyer belt rotation, a log entry from two days ago might be found in:

- log - weekly rotation, not yet rotated. Depends on the day of the week.

- log.1 - weekly rotation, on certain days of the week.

- log.0 - under a different log rotation implementation…

- log.2 - daily rotation, via previous log.1 rotation, or log.1, if the first file was log.0.

- log - rotation, except the system crashed over the holiday weekend, and now the rotation is several days out of sync, or perhaps logrotate failed to run—and how would you know logrotated had been failing?

In other words, there is no easy way to calculate where a specific log will be located at any point in time under traditional log file rotation, without knowing the particulars of the log rotation implementation and configuration, and whether the system has been running or log rotation actually working. grep or other solutions must paw through logfile after logfile in search of data.

The simpler time bucket based solution offers programatic access to the logs from two days ago: knowing the current date, subtract two days. Knowing the time bucket pattern, convert the time stamp of two days ago with strftime(3). Done. The bucket method also completely avoids the logrotate not running issue, as either there will be logs (easy to detect), or no logs, as the logging program is not running (again, easy to detect).

Solutions

One solution: ensure the logging daemon—such as syslog-ng—rotates the log files itself, or better yet, writes to log files that do not need conveyer belt rotation:

destination everything {

file("/var/log/archive/$R_YEAR/$R_MONTH/$R_YEAR-$R_MONTH-$R_DAY"

template("$ISODATE <$FACILITY.$PRIORITY> $HOST $MSG\n")

template_escape(no)

);

};

Another solution: httplog. This utility can rotate logs into time and hostname based files, and maintain a symbolic link to the current logfile. For example, to rotate output from a shell command with a new logfile created each minute:

#!/bin/sh

HTTPLOG=httplog

# Must use fully qualified path, otherwise httplog mkdirs() fails.

# Do not use /tmp or /var/tmp on production systems!

HTTPLOG_ARGS="-s /tmp/log.current /tmp/%Y/%m/%d/%Y-%m-%dT%H:%M"

(

while sleep 5; do

date

done

) | $HTTPLOG $HTTPLOG_ARGS

Startup time logging schemes are less ideal than dividing logs into time based buckets: programmatic access to a log entry of a particular time is possible, but more difficult than under a time bucket scheme, as one cannot simply convert a time stamp to a filename directly with strftime(3). However, given the choice of a logrotate scheme, I would first recommend time based buckets, second a startup time scheme, and never hindsight conveyer belt rotation.